Introduction

If you're building voice agents on Vapi or Retell, you already know the drill. You make a prompt change, spin up a test call, listen to the entire conversation, take notes, then repeat. Maybe you rope in a few team members to help cover different scenarios. After a week of this, you've tested maybe 50 variations if you're lucky.

Here's the math that should make you uncomfortable: 100 test scenarios at 5 minutes per call, repeated across 3 testing iterations, works out to 1,500 minutes of calls, or at least 25 hours per cycle. That's more than three full workdays of someone literally talking to your bot. And that assumes everything goes smoothly, no one gets tired, and your testing stays consistent from the first call to the three-hundredth.
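To make that arithmetic explicit, here is the same calculation as a tiny Python snippet. It uses the figures above (100 scenarios, 5 minutes per call, 3 iterations); the 8-hour workday used for the conversion is an added assumption.

```python
# Back-of-the-envelope cost of manual voice QA, using the figures from the text.
SCENARIOS = 100          # test scenarios per iteration
MINUTES_PER_CALL = 5     # average length of one manual test call
ITERATIONS = 3           # testing iterations per cycle
HOURS_PER_WORKDAY = 8    # assumption: an 8-hour workday

def manual_qa_hours(scenarios: int, minutes_per_call: float, iterations: int) -> float:
    """Total hours of pure call time for one testing cycle."""
    return scenarios * minutes_per_call * iterations / 60

hours = manual_qa_hours(SCENARIOS, MINUTES_PER_CALL, ITERATIONS)
workdays = hours / HOURS_PER_WORKDAY
print(f"{hours:.0f} hours, about {workdays:.1f} workdays")  # 25 hours, about 3.1 workdays
```

This counts only talk time; note-taking, setup, and retries push the real number higher.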

But humans aren't built for this kind of repetitive testing. By call 30, your QA engineer is mentally checked out, rushing through scripts, and missing the edge cases that will break your agent in production. Research shows that 78% of voice AI failures happen in edge cases that manual testing never caught. You're spending weeks testing happy paths while real users hit your agent with background noise, strong accents, interruptions, and questions you never planned for.

The real cost isn't just the time. It's what your engineering team isn't building while they're stuck doing manual QA. Every hour spent making test calls is an hour not spent on features, integrations, or actually shipping your product. Teams report testing and iteration cycles taking weeks when they rely on manual methods, creating a significant bottleneck that slows down the entire development process.

In this guide, we'll show you how to test 10,000 voice agent scenarios in minutes without manual QA using Future AGI. Instead of making hundreds of calls yourself, you'll run automated voice AI testing at scale, catch critical failures before production, and free up your team to focus on what actually matters: building a voice agent that works.

What “10,000 Voice Scenarios” Actually Means

When we say 10,000 test scenarios, we're not talking about running the same conversation 10,000 times. We're talking about true scenario diversity: different user personas, varying intents, multiple conversation paths, and all the edge cases that make your voice agent fail in production. This is the difference between testing if your agent works and testing if it works for everyone.

The math here is simple but powerful. Take 10 different user personas (frustrated customer, first-time caller, someone with a thick accent), multiply by 50 common intents (cancel order, check status, request refund), then multiply again by 20 variations of each combination (background noise, interruptions, unclear speech). 10 × 50 × 20 gives you 10,000 unique test scenarios that cover the real-world conditions your voice agent will face.
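The persona × intent × variation matrix is just a Cartesian product. The sketch below builds it with Python's `itertools.product`; the first few names in each list come from the examples above, and the remaining entries are placeholders standing in for your own personas, intents, and variations.

```python
from itertools import product

# A few named examples from the text, padded with placeholders to reach 10 / 50 / 20.
personas = ["frustrated_customer", "first_time_caller", "thick_accent"] + [
    f"persona_{i}" for i in range(3, 10)
]
intents = ["cancel_order", "check_status", "request_refund"] + [
    f"intent_{i}" for i in range(3, 50)
]
variations = ["background_noise", "interruption", "unclear_speech"] + [
    f"variation_{i}" for i in range(3, 20)
]

def build_scenarios(personas, intents, variations):
    """Return one scenario dict per (persona, intent, variation) combination."""
    return [
        {"persona": p, "intent": i, "variation": v}
        for p, i, v in product(personas, intents, variations)
    ]

scenarios = build_scenarios(personas, intents, variations)
print(len(scenarios))  # 10000
```

Every scenario is a distinct combination, which is what separates this from running one conversation 10,000 times.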

Here's why this level of coverage actually matters: the edge case you skip during testing is exactly the one that will break your agent when you're trying to enjoy your weekend. Production doesn't care about your happy path scenarios. Real users will hit your agent with:

Heavy accents and speech patterns you never trained for

Background noise from cars, restaurants, or crying babies

Mid-conversation topic switches that derail your flow

Rapid-fire questions before your agent finishes speaking

Vague requests that don't map cleanly to any intent

Technical issues like latency spikes or connection drops

Four Ways to Generate Test Scenarios at Scale

Future AGI supports four approaches to create test scenarios. You can use one or combine them based on your data and coverage needs.

Dataset-driven testing pulls from your existing customer data to create realistic test scenarios. If you have historical conversation logs, support tickets, or CRM data, you can extract real user profiles and actual questions people ask. This gives you test scenarios based on what customers actually say, not what you think they'll say.
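As a rough illustration of the dataset-driven idea, the sketch below reads a call-log export and turns the most frequent (caller segment, opening line) pairs into test scenarios. The CSV layout and column names (`caller_segment`, `first_utterance`) are hypothetical; this is a generic Python sketch, not Future AGI's import format.

```python
import csv
import io
from collections import Counter

# Hypothetical export of historical call logs; column names are assumptions.
LOG_CSV = """call_id,caller_segment,first_utterance
1,new_customer,I want to cancel my order
2,returning_customer,Where is my package?
3,new_customer,I want to cancel my order
4,returning_customer,Can I get a refund for a damaged item?
"""

def scenarios_from_logs(csv_text: str, top_n: int = 10) -> list[dict]:
    """Turn the most frequent (segment, opening line) pairs into test scenarios."""
    rows = csv.DictReader(io.StringIO(csv_text))
    counts = Counter(
        (row["caller_segment"], row["first_utterance"].strip()) for row in rows
    )
    return [
        {"persona": segment, "opening_line": utterance, "frequency": n}
        for (segment, utterance), n in counts.most_common(top_n)
    ]

for scenario in scenarios_from_logs(LOG_CSV):
    print(scenario)
```

Weighting by frequency keeps the test set anchored to what customers actually say most often.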

Conversation graphs map every possible path a user can take through your voice flow. You start with the entry point, branch out for each decision point or intent, and track all the ways a conversation can unfold. This method catches logic errors and dead ends in your conversation design that manual testing would miss because humans naturally follow predictable paths.
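Here is a minimal sketch of the conversation-graph approach, assuming the flow is stored as a plain adjacency dict with hypothetical node names. A depth-first search enumerates every path from the entry point, and any path that stops somewhere other than an `END_*` node is reported as a dead end.

```python
# A conversation flow as a directed graph: node -> list of next nodes.
# Node names are illustrative; "END_*" nodes are the valid terminal states.
FLOW = {
    "greeting": ["identify_intent"],
    "identify_intent": ["cancel_order", "check_status", "request_refund"],
    "cancel_order": ["confirm_cancel"],
    "confirm_cancel": ["END_resolved"],
    "check_status": ["END_resolved"],
    "request_refund": ["verify_purchase"],
    "verify_purchase": [],  # design bug: no way forward and not a terminal state
}

def enumerate_paths(graph, start):
    """Depth-first search returning every path from start to a node with no exits."""
    paths, stack = [], [(start, [start])]
    while stack:
        node, path = stack.pop()
        next_nodes = graph.get(node, [])
        if not next_nodes:
            paths.append(path)
            continue
        for nxt in next_nodes:
            if nxt not in path:  # skip cycles so the search always terminates
                stack.append((nxt, path + [nxt]))
    return paths

def dead_ends(paths):
    """Paths that stop somewhere other than an END_* terminal node."""
    return [p for p in paths if not p[-1].startswith("END_")]

all_paths = enumerate_paths(FLOW, "greeting")
print(len(all_paths), "paths; dead ends:", dead_ends(all_paths))
```

Each enumerated path can then become a scenario, so every branch gets exercised rather than only the ones a human tester happens to follow.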

Targeted scripts focus on specific edge cases and known failure modes you want to stress-test. These are the scenarios that broke your agent last week, the complaints from your support team, or the situations you know are hard for your LLM to handle. You write explicit test scripts for things like handling angry customers, dealing with ambiguous requests, or recovering from misunderstood speech.
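A targeted script can be as simple as fixed caller turns plus pass/fail checks on what the agent says back. The sketch below models that with a Python dataclass; the field names (`must_mention`, `must_not_mention`) and the example script are hypothetical, not a Future AGI schema.

```python
from dataclasses import dataclass, field

@dataclass
class TargetedScript:
    """A hand-written edge-case test: fixed caller turns plus pass/fail checks.
    Field names are illustrative, not a Future AGI schema."""
    name: str
    caller_turns: list[str]
    must_mention: list[str] = field(default_factory=list)      # phrases the agent should say
    must_not_mention: list[str] = field(default_factory=list)  # phrases that signal a failure

    def check(self, agent_replies: list[str]) -> list[str]:
        """Return failure reasons; an empty list means the script passed."""
        text = " ".join(agent_replies).lower()
        failures = [f"missing: {p}" for p in self.must_mention if p.lower() not in text]
        failures += [f"forbidden: {p}" for p in self.must_not_mention if p.lower() in text]
        return failures

angry_refund = TargetedScript(
    name="angry customer demands refund",
    caller_turns=["This is the third time I'm calling!", "Just give me my money back."],
    must_mention=["sorry", "refund"],
    must_not_mention=["calm down"],
)

replies = ["I'm sorry about that.", "Please calm down, I can start a refund."]
print(angry_refund.check(replies))  # ['forbidden: calm down']
```

Substring checks are deliberately crude here; in practice an LLM-based evaluator would judge tone and intent more reliably.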

Auto-generation uses AI to analyze your voice agent's capabilities and automatically create diverse test scenarios. The synthetic dataset generation produces thousands of variations based on your agent's configuration, intents, and conversation patterns. The AI understands what your agent is supposed to do and generates realistic user inputs that challenge those capabilities at scale.

How AI-Powered Test Agents Work

AI-powered test agents let you stress-test your voice agent without sitting through endless manual calls. Here is how this works in practice with Future AGI test agents.

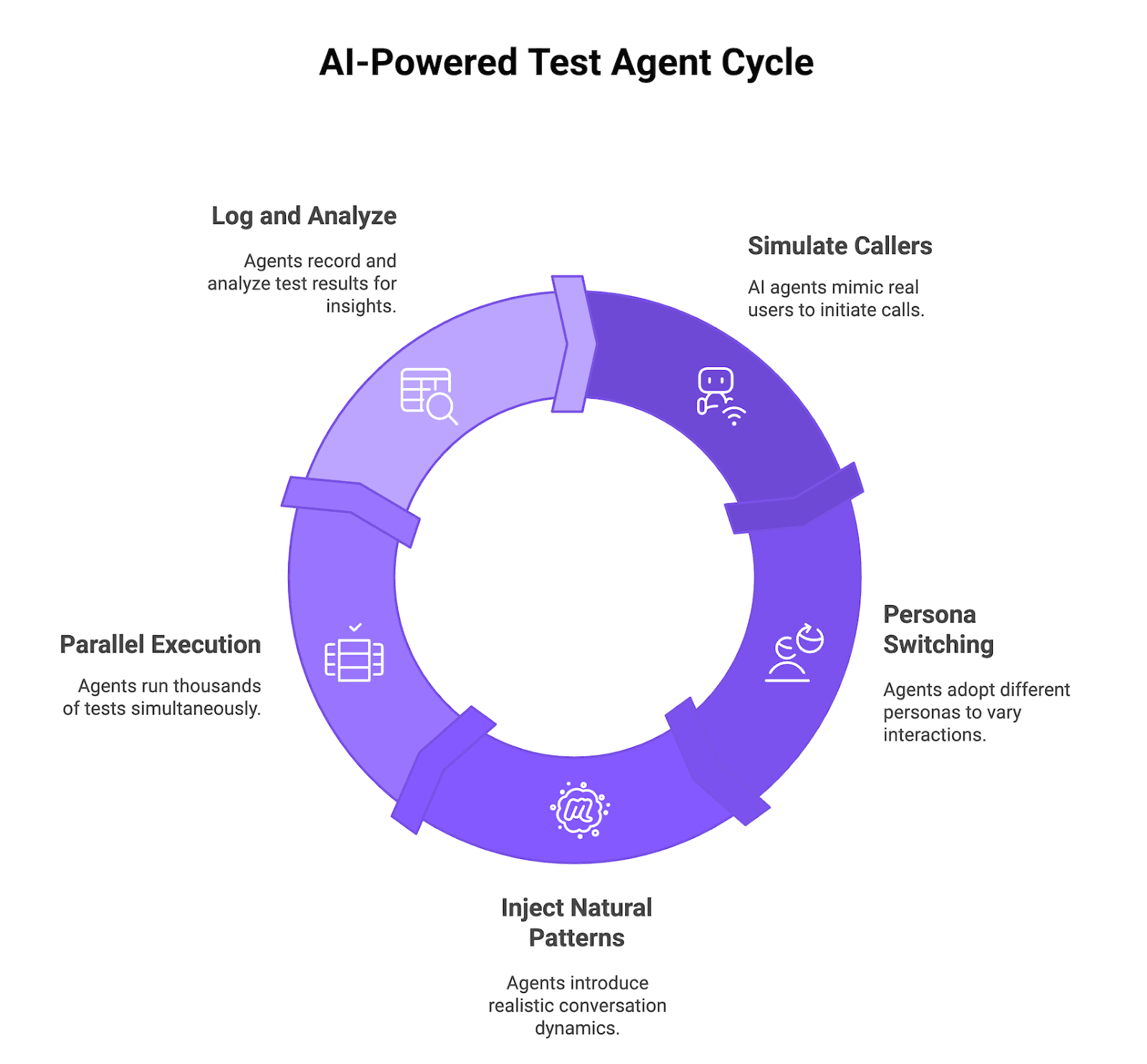

Figure 1: AI-Powered Test Agent Cycle

4.1 Simulated callers that behave like real users

Future AGI uses AI-driven test agents that act as simulated callers, placing inbound calls to your voice agent or receiving outbound calls from it, just like a real user would. Whether your Vapi or Retell agent initiates the call or answers it, the test agents send audio, wait for responses, follow the conversation flow, and log every step so you can see exactly where the agent hesitates, fails, or returns a bad answer.
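The send, wait, and log loop can be sketched in a few lines. This is a simplified, hypothetical harness: text stands in for audio, and `toy_agent` is a placeholder for the agent under test, so it only illustrates the shape of the loop, not how Future AGI places phone calls.

```python
import time
from typing import Callable

def run_simulated_call(agent: Callable[[str], str], caller_turns: list[str]) -> list[dict]:
    """Play caller turns against an agent and log each exchange.
    Text stands in for audio; a real harness would stream audio over a phone call."""
    log = []
    for turn_index, utterance in enumerate(caller_turns):
        started = time.perf_counter()
        reply = agent(utterance)  # "send audio, wait for the response"
        latency_ms = (time.perf_counter() - started) * 1000
        log.append({
            "turn": turn_index,
            "caller": utterance,
            "agent": reply,
            "latency_ms": round(latency_ms, 2),
            "empty_reply": not reply.strip(),  # flag turns where the agent said nothing
        })
    return log

def toy_agent(utterance: str) -> str:
    """Hypothetical stand-in for the voice agent under test."""
    if "status" in utterance.lower():
        return "Your order shipped yesterday."
    return ""  # simulates a failure to answer

call_log = run_simulated_call(toy_agent, ["Hi, what's my order status?", "Can I change the address?"])
for entry in call_log:
    print(entry)
```

Logging every turn, not just the final outcome, is what lets you point to the exact moment a call went wrong.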

4.2 Multi-persona behavior: skeptical, impatient, confused, detailed

On top of basic call simulation, these test agents can switch between multiple personas such as skeptical, impatient, confused, or highly detailed callers. You can run the same scenario through different personas to see how tone, patience level, and the amount of context shared change your agent's behavior and success rate.
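Comparing personas on the same scenario boils down to grouping pass/fail results by persona. The sketch below assumes results arrive as `(persona, passed)` pairs; the data is made up purely to show the computation.

```python
from collections import defaultdict

# (persona, passed) results for one scenario run under several personas; illustrative data.
results = [
    ("skeptical", True), ("skeptical", False),
    ("impatient", False), ("impatient", False),
    ("confused", True), ("confused", True),
    ("detailed", True), ("detailed", False),
]

def success_rate_by_persona(results):
    """Fraction of passing runs for each persona."""
    totals, passes = defaultdict(int), defaultdict(int)
    for persona, passed in results:
        totals[persona] += 1
        passes[persona] += int(passed)
    return {persona: passes[persona] / totals[persona] for persona in totals}

# Lowest success rate first, so the hardest persona stands out.
for persona, rate in sorted(success_rate_by_persona(results).items(), key=lambda kv: kv[1]):
    print(f"{persona:10s} {rate:.0%}")
```

A gap like the one for the impatient persona here would point you toward barge-in handling or overly long responses.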

4.3 Natural conversation patterns: interruptions, topic changes, clarifications

Building on that, Future AGI test agents inject natural conversation patterns that mirror messy real calls instead of clean, scripted flows. They can interrupt the agent mid-sentence, change topics halfway through, ask for clarifications, or repeat questions to stress-test how your system handles barge-in, context shifts, and recovery from errors.
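One way to picture this is as a perturbation step applied to a clean script. In the hypothetical sketch below, each scripted turn may be followed by an interruption, a topic change, a clarification request, or a repeat. The wording, the `barge_in` flag, and the injection rate are assumptions for illustration only.

```python
import random

PATTERN_KINDS = ["interrupt", "topic_change", "clarify", "repeat"]

def make_pattern(kind: str, turn: str) -> dict:
    """Build one injected caller action; wording and fields are illustrative."""
    if kind == "interrupt":
        return {"pattern": kind, "text": "Hold on, one second.", "barge_in": True}
    if kind == "topic_change":
        return {"pattern": kind, "text": "Actually, can you check my balance first?"}
    if kind == "clarify":
        return {"pattern": kind, "text": "Sorry, what do you mean by that?"}
    return {"pattern": "repeat", "text": turn}  # caller repeats themselves

def perturb(script: list[str], rate: float, seed: int = 0) -> list[dict]:
    """After each scripted turn, inject a messy pattern with probability `rate`."""
    rng = random.Random(seed)  # seeded so a failing run can be replayed exactly
    turns = []
    for turn in script:
        turns.append({"pattern": None, "text": turn})
        if rng.random() < rate:
            turns.append(make_pattern(rng.choice(PATTERN_KINDS), turn))
    return turns

clean = ["I need to reschedule my appointment.", "Next Tuesday works.", "Morning, please."]
for t in perturb(clean, rate=0.5, seed=7):
    print(t)
```

Seeding the random generator matters: when a perturbed call fails, you want to replay exactly the same mess after applying a fix.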

4.4 Parallel execution: Thousands of tests running simultaneously

All of this pays off at scale when you run thousands of these AI callers in parallel rather than one call at a time. Future AGI can spin up and coordinate large batches of voice test runs at once so you can execute 10,000 scenarios in minutes instead of spending weeks on manual QA, while still logging detailed metrics and audio for deep inspection when you need it.
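The core idea behind parallel execution is bounded concurrency: launch many calls at once, but cap how many are in flight. Here is a minimal `asyncio` sketch of that pattern; `simulate_call` is a hypothetical stand-in that just sleeps, and the concurrency cap of 500 is an arbitrary example value, not a Future AGI setting.

```python
import asyncio
import random

async def simulate_call(scenario_id: int) -> dict:
    """Hypothetical stand-in for one simulated voice call; a real one would dial the agent."""
    await asyncio.sleep(random.uniform(0.001, 0.005))  # pretend call duration
    return {"scenario": scenario_id, "passed": scenario_id % 10 != 0}

async def run_batch(scenario_ids, max_concurrency: int = 500) -> list[dict]:
    """Run many simulated calls concurrently, capped by a semaphore so the
    agent's telephony or rate limits are not exceeded."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(sid):
        async with semaphore:
            return await simulate_call(sid)

    # gather preserves input order in its result list
    return await asyncio.gather(*(guarded(sid) for sid in scenario_ids))

results = asyncio.run(run_batch(range(10_000)))
print(sum(r["passed"] for r in results), "of", len(results), "passed")
```

Wall-clock time then scales with the number of batches, roughly total calls divided by the concurrency cap, instead of with the total number of calls.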

Setting Up Automated Voice AI Testing With Future AGI

Getting your voice agent under test with Future AGI takes less than 10 minutes from signup to your first results. Here's the exact process, broken down step by step.

Step 1: Connect your voice agent

Future AGI connects directly to your voice agent using just your phone number. You create an agent definition in the platform, enter the phone number your agent uses, and optionally enable observability to track real production calls alongside your test runs. That's it. No SDK installation, no complex integration, just a phone number and you're ready to test.

Step 2: Define or auto-generate test scenarios

Once your agent is connected, you can upload existing customer conversation data, historical support logs, or let Future AGI's synthetic dataset generation create realistic test scenarios for you automatically. The platform analyzes your agent's intents and conversation paths to generate diverse scenarios covering edge cases you might not think to test manually.

Step 3: Configure personas and evaluation criteria

Next, you set up simulation agents with different personas like skeptical customers, impatient callers, or confused first-time users by adjusting the prompt, temperature, voice settings, and interrupt sensitivity. At the same time, you configure evaluation metrics that define success for each test, such as intent match accuracy, resolution rate, response latency, and conversation quality. Future AGI captures native audio recordings for every test call, so you can listen to exactly what happened instead of relying only on transcripts.
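To make the persona settings concrete, here is a hypothetical configuration sketch using Python dataclasses. The fields mirror the settings named above (prompt, temperature, voice, interrupt sensitivity) plus example pass thresholds, but the field names, the 0 to 2 temperature range, and every value are assumptions, not Future AGI's actual schema.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PersonaConfig:
    """Simulation-agent persona. Field names are illustrative, not Future AGI's schema."""
    name: str
    prompt: str
    temperature: float            # higher = more varied caller wording (assumed 0-2 range)
    voice: str                    # TTS voice identifier (hypothetical values)
    interrupt_sensitivity: float  # 0.0 = never barges in, 1.0 = interrupts constantly

    def __post_init__(self):
        if not 0.0 <= self.temperature <= 2.0:
            raise ValueError("temperature must be between 0 and 2")
        if not 0.0 <= self.interrupt_sensitivity <= 1.0:
            raise ValueError("interrupt_sensitivity must be between 0 and 1")

@dataclass(frozen=True)
class EvalCriteria:
    """Pass thresholds for one test batch (example values, not recommendations)."""
    min_intent_match: float = 0.90
    min_resolution_rate: float = 0.80
    max_p95_latency_ms: int = 1500

PERSONAS = [
    PersonaConfig("skeptical", "You doubt everything the agent says and ask for proof.", 0.7, "voice_a", 0.3),
    PersonaConfig("impatient", "You are in a hurry and cut the agent off often.", 0.9, "voice_b", 0.9),
    PersonaConfig("confused_first_timer", "You have never called before and mix up terms.", 1.0, "voice_c", 0.2),
]
CRITERIA = EvalCriteria()
```

Validating ranges at construction time catches typos in persona definitions before they silently skew a 10,000-call run.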

Step 4: Run tests and review results

Hit run, and Future AGI executes all your scenarios in parallel, capturing full audio, transcripts, latency stats, and agent behavior for every test call. Results appear in a dashboard where you can filter by failures, compare test runs over time, drill into specific conversations, and identify patterns in what's breaking your agent.

Time to first test: Under 10 minutes

From creating your account to seeing your first batch of test results, the entire setup process takes under 10 minutes.

Interpreting Results: From Test Data to Actionable Fixes

Running 10,000 tests is only useful if you can quickly spot what's broken and fix it. Future AGI gives you multiple layers of analysis to turn raw test data into concrete improvements.

6.1 Evaluation metrics: task completion, conversation quality, compliance

Future AGI lets you choose exactly which metrics matter for your specific use case, instead of forcing a one-size-fits-all scorecard. You select the evaluations you want to run for each test batch, including:

Task completion rate measures whether your voice agent actually solved what the user called about, like successfully booking an appointment, processing a refund, or answering a question correctly.

Conversation quality evaluates how natural and effective the interaction feels, tracking things like appropriate response time, coherent dialogue flow, and whether the agent understood the user's intent on the first try.

Compliance and safety catches issues like sharing sensitive information incorrectly, making claims your legal team hasn't approved, or failing to follow required scripts for regulated industries.
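However the per-call scores are produced, batch-level metrics are simple aggregates over them. The sketch below assumes each scored call is a dict with hypothetical fields (`task_completed`, `intent_on_first_try`, `compliance_flags`) and rolls them up into the three metric families listed above.

```python
# Each record is one scored test call; field names and values are illustrative.
calls = [
    {"task_completed": True,  "intent_on_first_try": True,  "compliance_flags": []},
    {"task_completed": False, "intent_on_first_try": False, "compliance_flags": []},
    {"task_completed": True,  "intent_on_first_try": False, "compliance_flags": ["unapproved_claim"]},
    {"task_completed": True,  "intent_on_first_try": True,  "compliance_flags": []},
]

def summarize(calls: list[dict]) -> dict:
    """Aggregate per-call evaluations into batch-level metrics."""
    n = len(calls)
    return {
        "task_completion_rate": sum(c["task_completed"] for c in calls) / n,
        "first_try_intent_rate": sum(c["intent_on_first_try"] for c in calls) / n,
        "compliance_violations": sum(len(c["compliance_flags"]) for c in calls),
    }

print(summarize(calls))
# {'task_completion_rate': 0.75, 'first_try_intent_rate': 0.5, 'compliance_violations': 1}
```

Compliance is counted as a raw number of violations rather than a rate, since a single violation can matter more than any percentage.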

6.2 Failure clustering: Identify patterns across thousands of tests

Instead of reviewing 10,000 test results one by one, Future AGI groups similar failures together so you can fix entire categories of issues at once:

The platform uses AI to cluster failed conversations by root cause, showing you that 200 tests failed because of the same prompt confusion or that a specific intent consistently fails with certain phrasings.

You get failure distribution breakdowns that reveal which user personas, conversation paths, or edge cases are causing the most problems. But Future AGI goes a step further: the Auto Agent Optimizer can automatically analyze these failures and suggest specific prompt or configuration changes to fix them. Instead of just showing you what's broken, the platform helps you patch the leaks immediately so you can rerun tests and verify the fix in minutes.
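Future AGI uses AI to cluster failures. The sketch below swaps in something much simpler: plain grouping by `(intent, root_cause)`, assuming each failure already carries a root-cause label. It only shows how clustering turns many individual failures into a short, ranked list of problems. All data is illustrative.

```python
from collections import defaultdict

# Failed test calls, each with a root-cause label assumed to be assigned already.
failures = [
    {"id": 1, "intent": "request_refund", "root_cause": "asked for order number twice", "phrasing": "money back"},
    {"id": 2, "intent": "request_refund", "root_cause": "asked for order number twice", "phrasing": "refund"},
    {"id": 3, "intent": "cancel_order",   "root_cause": "misrouted to billing",          "phrasing": "stop my order"},
    {"id": 4, "intent": "request_refund", "root_cause": "asked for order number twice", "phrasing": "money back"},
]

def cluster_failures(failures):
    """Group failures by (intent, root cause), largest cluster first."""
    clusters = defaultdict(list)
    for f in failures:
        clusters[(f["intent"], f["root_cause"])].append(f)
    return sorted(clusters.items(), key=lambda kv: len(kv[1]), reverse=True)

for (intent, cause), members in cluster_failures(failures):
    phrasings = sorted({m["phrasing"] for m in members})
    print(f"{len(members):>3}  {intent:15s} {cause}  phrasings={phrasings}")
```

Listing the distinct phrasings inside each cluster helps spot the "specific intent fails with certain phrasings" pattern described above.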

6.3 Audio analysis integration: Catch latency and tone issues

Beyond just transcript accuracy, Future AGI analyzes the actual audio to catch problems that text alone misses:

Latency tracking shows where your agent is slow to respond, with detailed breakdowns of time spent on speech recognition, LLM processing, and text-to-speech generation so you know exactly what to optimize.

Tone and speech quality metrics detect when your agent sounds robotic, cuts off users mid-sentence, or has audio artifacts that hurt the user experience even when the words are technically correct.
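A per-stage latency breakdown is easy to reason about once you have timings for each turn. The sketch below assumes per-turn millisecond timings for speech recognition (`asr`), LLM processing (`llm`), and text-to-speech (`tts`); the numbers are made up, and the point is only to show how the dominant stage is identified.

```python
from statistics import mean

# Per-turn timings in milliseconds for each pipeline stage (illustrative numbers).
turns = [
    {"asr": 180, "llm": 620, "tts": 210},
    {"asr": 200, "llm": 1450, "tts": 190},
    {"asr": 170, "llm": 540, "tts": 230},
]

def latency_breakdown(turns):
    """Average time per stage, each stage's share of the total, and the bottleneck stage."""
    stages = list(turns[0].keys())
    averages = {s: mean(t[s] for t in turns) for s in stages}
    total = sum(averages.values())
    shares = {s: averages[s] / total for s in stages}
    bottleneck = max(averages, key=averages.get)
    return averages, shares, bottleneck

averages, shares, bottleneck = latency_breakdown(turns)
for stage in averages:
    print(f"{stage}: {averages[stage]:.0f} ms ({shares[stage]:.0%})")
print("optimize first:", bottleneck)
```

Averages hide spikes like the 1,450 ms LLM turn above, so in practice you would also track tail percentiles per stage.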

6.4 Prioritization: Which failures matter most?

Not all test failures deserve the same attention, and Future AGI helps you rank issues by actual business impact:

The dashboard shows failure frequency and affected user count, so you can see that one bug is hitting 30% of all calls while another edge case only affects 0.5%.

Each failure gets tagged with severity based on whether it completely blocks the user, degrades experience, or is just a minor annoyance, helping you decide what to fix today versus what can wait for the next sprint.
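One simple way to combine frequency and severity into a single ranking is to multiply an issue's share of calls by a severity weight. The weights below are an assumption to tune for your own business, and the issues are invented examples; the sketch shows why a frequent "degraded" issue can outrank a rare "blocking" one.

```python
# Severity weights are an assumption; tune them to your own business impact.
SEVERITY_WEIGHT = {"blocking": 10, "degraded": 3, "minor": 1}

issues = [
    {"name": "refund flow loops on order number", "share_of_calls": 0.30, "severity": "degraded"},
    {"name": "crashes on Spanish greeting", "share_of_calls": 0.005, "severity": "blocking"},
    {"name": "reads URL aloud character by character", "share_of_calls": 0.08, "severity": "minor"},
]

def priority(issue):
    """Impact score: how often the issue occurs times how badly it hurts."""
    return issue["share_of_calls"] * SEVERITY_WEIGHT[issue["severity"]]

for issue in sorted(issues, key=priority, reverse=True):
    print(f"{priority(issue):.3f}  {issue['severity']:9s} {issue['name']}")
```

A linear score is a starting point, not a rule; many teams still fix any blocking issue immediately regardless of its score.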

Continuous Testing: Building Voice AI QA Into Your CI/CD

Once your test suite is stable, you can trigger Future AGI runs automatically on every staging or production deployment from GitHub Actions, GitLab CI, or any other pipeline you use. This gives your voice agent a repeatable safety check so regressions show up in a test report instead of on a live call with a customer.

Automated regression testing on every deployment: Reuse the same set of voice test scenarios and personas as a regression pack that runs on each merge or release, just like unit or integration tests for code. If task completion rate, latency, or critical flows drop below your thresholds, the pipeline can flag or even block the deployment until someone reviews the failures.
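A deployment gate is ultimately a comparison of run metrics against thresholds, turned into an exit code the CI job can act on. The sketch below is a generic Python gate; the metric names, threshold values, and the `latest` numbers are hypothetical, and fetching results from a real test run is left out.

```python
# Thresholds for the regression pack; the numbers are examples only.
THRESHOLDS = {
    "task_completion_rate": ("min", 0.85),
    "p95_latency_ms": ("max", 1500),
    "critical_flow_pass_rate": ("min", 1.0),
}

def gate(metrics: dict, thresholds: dict = THRESHOLDS) -> list[str]:
    """Return threshold violations; an empty list means the deploy can proceed."""
    violations = []
    for name, (kind, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            violations.append(f"{name}: missing from results")
        elif kind == "min" and value < limit:
            violations.append(f"{name}: {value} < {limit}")
        elif kind == "max" and value > limit:
            violations.append(f"{name}: {value} > {limit}")
    return violations

def exit_code(metrics: dict) -> int:
    """Print each violation and return a CI-style exit code (0 = pass, 1 = block)."""
    problems = gate(metrics)
    for p in problems:
        print("FAIL", p)
    return 1 if problems else 0

# Hypothetical metrics from the latest test run.
latest = {"task_completion_rate": 0.82, "p95_latency_ms": 1320, "critical_flow_pass_rate": 1.0}
code = exit_code(latest)
# A CI step would end with sys.exit(code) so a nonzero value fails the job.
```

Treating a missing metric as a failure is a deliberate choice: a broken test run should not quietly let a deployment through.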

Baseline comparison to catch drift: Future AGI keeps historical runs so you can compare the latest test results against a known good baseline and see how accuracy, completion rate, and call quality have moved over time. This helps you catch performance drift from prompt changes, new model versions, or provider updates long before support tickets start to spike.
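Baseline comparison adds direction and tolerance on top of plain thresholds: a metric only counts as drift if it moved the wrong way by more than you are willing to accept. The sketch below assumes two metric snapshots as dicts; the metric names, tolerances, and values are illustrative.

```python
# Metrics from a known-good baseline run and the latest run (illustrative values).
baseline = {"task_completion_rate": 0.91, "first_try_intent_rate": 0.88, "avg_latency_ms": 950}
latest = {"task_completion_rate": 0.86, "first_try_intent_rate": 0.89, "avg_latency_ms": 1180}

# For each metric: which direction is better, and how much worsening to tolerate.
RULES = {
    "task_completion_rate": ("higher", 0.02),
    "first_try_intent_rate": ("higher", 0.02),
    "avg_latency_ms": ("lower", 100),
}

def drift_report(baseline, latest, rules=RULES):
    """Return {metric: delta} for metrics that got worse by more than their tolerance."""
    regressions = {}
    for name, (better, tolerance) in rules.items():
        delta = latest[name] - baseline[name]
        worse_by = -delta if better == "higher" else delta
        if worse_by > tolerance:
            regressions[name] = round(delta, 4)
    return regressions

print(drift_report(baseline, latest))
```

Here the small gain in first-try intent rate would not hide the drop in task completion or the latency increase, which is the kind of mixed result a single overall score tends to mask.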

Integration with observability: By linking simulation results with voice observability, you can line up test failures with real production call traces and see whether the same patterns appear in live traffic. That connection makes it easier to decide if an issue is just a synthetic edge case or something that is already hurting actual users.

The feedback loop: Simulation → Evaluation → Optimization: Your CI job triggers simulations, Future AGI runs evals on every call, and you use the scored output to adjust prompts, flows, or routing logic, then push another change and repeat. Over a few cycles, this loop turns into a steady improvement engine that keeps your voice agent reliable as you ship new features and experiments.

Conclusion

Manual voice agent testing doesn't scale, and your team knows it. Spending weeks on repetitive test calls means slower releases, missed edge cases, and engineers stuck doing QA work instead of building features. Automated voice AI testing with Future AGI flips this entire process. You can run 10,000 diverse scenarios in minutes, catch failures before production, and build voice AI QA directly into your CI/CD pipeline. The difference between manually testing 100 happy paths and automatically stress-testing 10,000 real-world scenarios is the difference between hoping your agent works and knowing it works. Stop guessing which edge cases will break your voice agent in production.

Run 1,000 test scenarios free and see what your manual QA is missing. Sign up for Future AGI and get your first batch of automated voice agent tests running in under 10 minutes.

FAQs