New Features

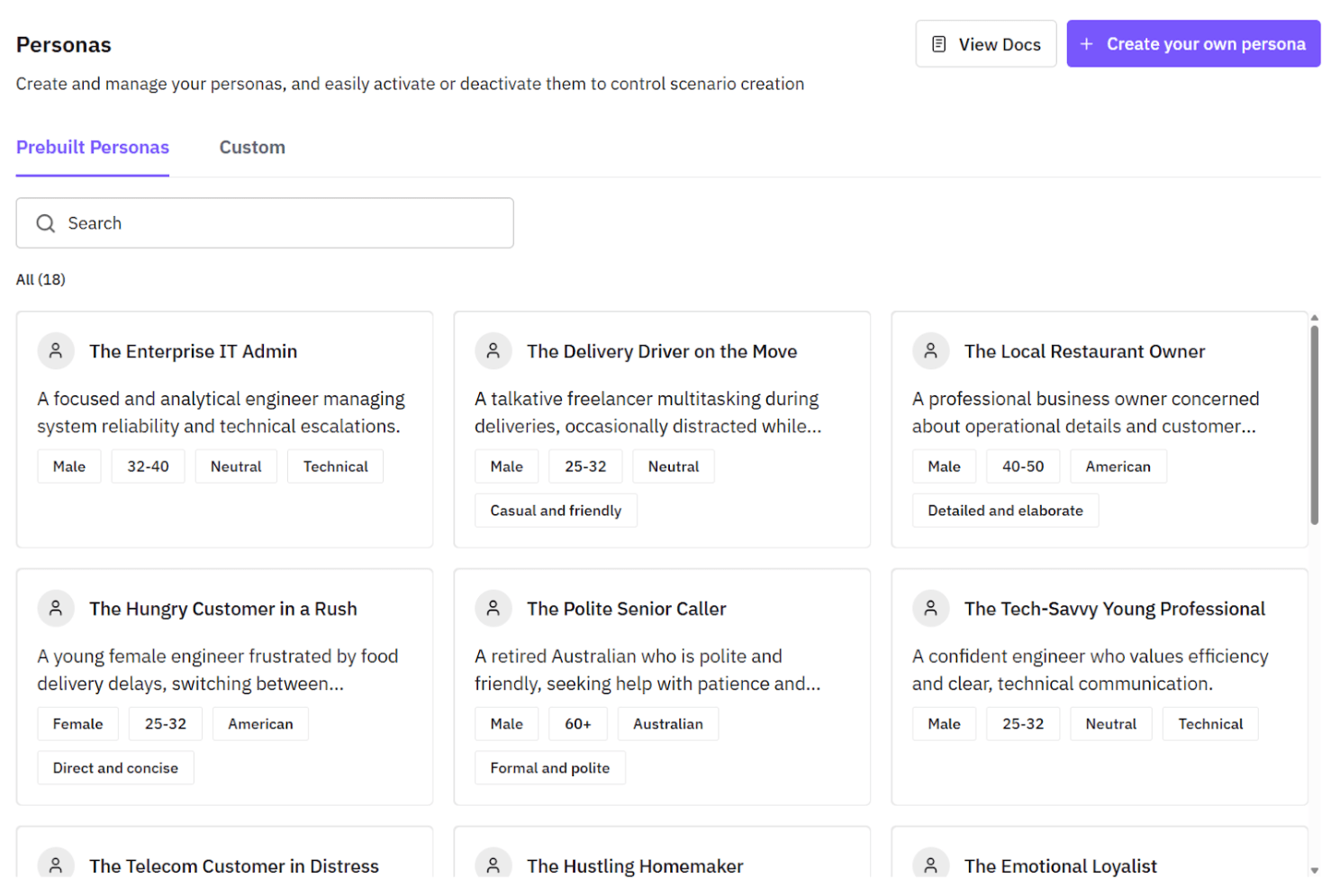

Test Your Voice Agent with Pre-built and Custom Personas

The new Persona feature in Simulate lets you test your voice agent against a wide range of human behaviors before deploying to production.

What's included:

Pre-built personas – Ready-to-use library of common customer types

Custom personas – Define tone, emotion, and behavior patterns for your specific needs

Edge case testing – Simulate frustrated, confused, or challenging interactions

Same agent. Different humans. Completely different outcomes. Identify issues and optimize performance across real-world scenarios before going live.

👉 Define your user Persona here

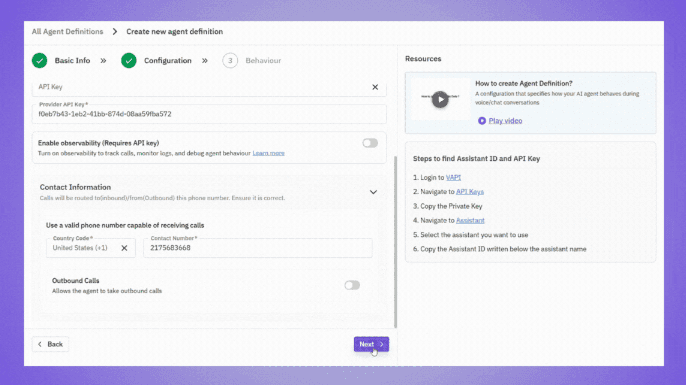

Testing for Outbound Calls

Simulate now supports outbound call testing in addition to inbound calls, letting you validate your voice agent's performance across the full call spectrum.

A few outbound scenarios you can test:

Follow-ups and reminders – Appointment confirmations, payment reminders, service updates

Renewals and campaigns – Subscription renewals, product launches, promotional offers

Sales and collections – Lead qualification, customer success check-ins, payment collection flows

👉 Simulate outbound calls - start here

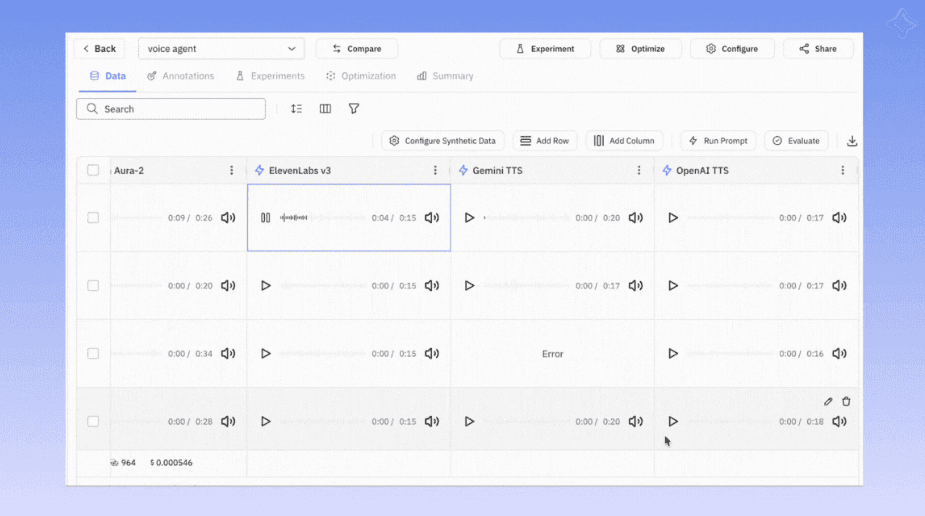

A/B Test Your Entire Voice AI Stack

The first end-to-end voice model experimentation platform that lets you A/B test your complete voice AI stack, from speech-to-text (STT) through the LLM to text-to-speech (TTS).

What you can test:

Compare providers side by side – Evaluate OpenAI, ElevenLabs, Deepgram, and other leading providers

Measure what matters – Track transcription accuracy, audio quality, and latency across different configurations

Stop guessing which voice AI stack works best. Find the combination that delivers for your real users and specific requirements before committing to production.
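Transcription accuracy is commonly scored with word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn an STT transcript into a reference transcript, divided by the number of words in the reference. The sketch below is a generic illustration of that metric, not Future AGI's own scoring implementation; the provider names and transcripts are made up for the example.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # dp[i][j] = minimum word edits to turn ref[:i] into hyp[:j]
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution (or exact match)
            )
    return dp[len(ref)][len(hyp)] / len(ref)


# Hypothetical A/B comparison: one reference, transcripts from two STT providers.
reference = "please confirm my appointment for tuesday"
candidates = {
    "stt_provider_a": "please confirm my appointment for tuesday",
    "stt_provider_b": "please confirm my appointments for thursday",
}
for name, transcript in candidates.items():
    print(f"{name}: WER = {word_error_rate(reference, transcript):.2f}")
# stt_provider_a: WER = 0.00
# stt_provider_b: WER = 0.33
```

Lower is better; in a real comparison you would average WER over many calls per configuration and report it alongside latency and audio-quality scores.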

👉 Compare Voice AI Models

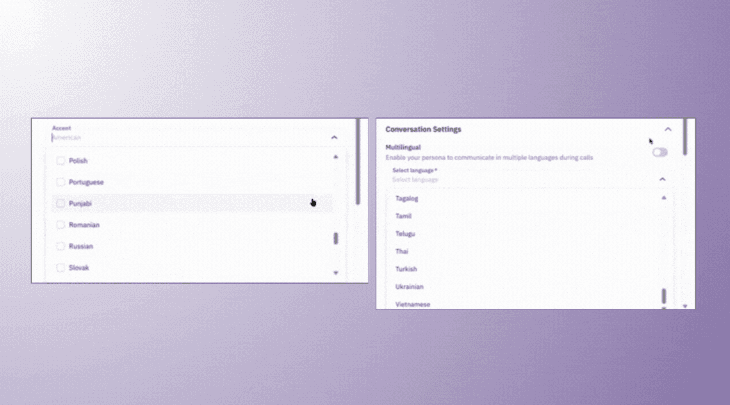

Test Across 30+ Languages and Accents

Simulate now supports multilingual and multi-accent testing, letting you validate your voice agent's performance across diverse global markets.

What's supported:

30+ languages – Test conversations in Arabic, Hindi, English, Spanish, and more

Regional accent variations – Validate understanding across different accents within the same language

End-to-end evaluation – Assess transcription accuracy, prosody, and intent recognition with realistic ASR/TTS pipelines

Ensure your voice agent delivers consistent, high-quality experiences for users across every region and language before launching in new markets.

👉 Try for free

New Integrations

Two major integrations that bring Future AGI's observability into the voice AI ecosystems you're already building in:

Voice Observability for LiveKit Agents [SDK]: Our TraceAI library now supports voice agents built with LiveKit. Capture spans, events, audio metrics, and tool interactions in a single trace, then pinpoint latency spikes and debug problematic call segments without guesswork. It's built for voice AI engineers, QA, and PM teams who need transparency into agent behavior at scale.

Retell Voice Observability: Real-time production monitoring for Retell-based voice agents is here. Enable full observability for Retell calls with trace-level visibility, real-time debugging, and audio interaction insights, all within the same unified workspace you use for the rest of your voice AI stack.

Future AGI x n8n

Enterprise prompt infrastructure meets workflow automation. Our official n8n community node eliminates manual prompt handling across your workflows.

What you can automate:

Centralized prompt management – Create, test, and version prompts in Future AGI, then pull them into n8n by name

Smart template variables – Map workflow data (customer name, context, history) directly into prompt variables

Built-in observability and evals – Every prompt execution is automatically logged with performance tracking

Version control that works – Switch between production, staging, or any version instantly without editing workflows

Add the Future AGI node → Pull prompt by name → Map workflow data = Done. 80% less time managing prompts. 100% focused on building agents.
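To make the "map workflow data" step concrete, here is a minimal sketch of filling a prompt template's variables from a workflow item. It assumes a `{{variable}}` placeholder syntax and a plain dictionary of workflow fields; both are illustrative assumptions, and `render_prompt`, `TEMPLATE`, and the field names are hypothetical, not the Future AGI node's actual API.

```python
import re

# Hypothetical prompt template; the {{variable}} placeholder syntax is an
# assumption for illustration, not necessarily Future AGI's exact format.
TEMPLATE = "You are a support agent. Greet {{customer_name}} and use this context: {{context}}"

# Matches {{ name }} with optional inner whitespace and captures the name.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(template: str, variables: dict[str, str]) -> str:
    """Replace each {{name}} placeholder with variables[name]; fail loudly on gaps."""
    needed = {m.group(1) for m in PLACEHOLDER.finditer(template)}
    missing = needed - set(variables)
    if missing:
        raise KeyError(f"missing template variables: {sorted(missing)}")
    return PLACEHOLDER.sub(lambda m: str(variables[m.group(1)]), template)


# Data as it might arrive from an upstream workflow step (hypothetical fields).
workflow_item = {"customer_name": "Priya", "context": "late delivery on order 1042"}
print(render_prompt(TEMPLATE, workflow_item))
```

Raising on missing variables, rather than leaving `{{...}}` in the text, keeps a half-filled prompt from silently reaching the model when an upstream step changes its output fields.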

Knowledge Nuggets

Free eBook - Mastering AI Agent Evaluation

AI agents are simple to build but risky to deploy without proper testing. This playbook gives you a complete evaluation framework to make agents reliable in production, covering failure modes, testing infrastructure, experiments, and monitoring. Built for teams deploying voice, image, or RAG agents in high-stakes domains like support, finance, healthcare, and legal.

🔗 Get your free copy here.

Weekly AMAs to “Fix your Agent”

Join our weekly open office hours where Future AGI's engineering team helps you tackle real-world agent challenges. Bring your debugging questions, evaluation dilemmas, and production headaches, whether you're building RAG systems, voice agents, or any other agentic application.

🔗 Register for the series - click here!

Webinar on ‘Building AI-Native Interfaces’

Watch a live demo of AG-UI, an open protocol for building AI-native interfaces that stream and adapt in real time based on agent behavior. Explore real-world examples of multi-agent workflows and vendor-neutral messaging across frameworks.

🔗 Watch or save for later - click here!

We Were at /function1 in Dubai

We brought Future AGI to /function1 in Dubai, connecting with founders, engineers, and researchers focused on moving AI from prototypes to reliable production systems. Huge thanks to everyone who joined us to discuss agents, observability, and what it takes to ship AI that actually works in the real world.

December's here, and we're hoping to close out the year with some Christmas cheer 🎄 and a lot of reliable agents shipped to production. Whether you're wrapping up builds or planning for 2026, here's to the momentum carrying forward.

Facing tricky AI problems? Slide into our DMs and share the challenges you’re tackling. Let’s brainstorm solutions together.

🗓️ Book a free demo or schedule a call to see our platform in action!

Your partner in building Trustworthy AI!