Overview

Effective meeting summarization helps organizations streamline communication and maintain productivity. Many AI models can generate summaries, but selecting the best-performing one requires systematic evaluation against specific metrics.

Future AGI provides a robust evaluation platform, accessible via its dashboard and SDK, enabling organizations to:

Compare AI-generated meeting summaries across multiple models.

Evaluate summaries based on relevance, coherence, brevity, and coverage.

Identify the best-performing model through actionable metrics.

Gain insights through a detailed dashboard.

This case study demonstrates how Future AGI’s SDK helps organizations optimize their meeting summarization workflows.

Problem Statement

An organization uses multiple AI models to generate meeting summaries. Its workflow involves:

Generating summaries from different models.

Comparing and evaluating summaries manually.

Challenges:

Subjective Evaluation: Human biases in assessing summaries lead to inconsistent results.

Scalability Issues: Evaluating outputs from multiple models is time-consuming.

Lack of Metrics: No systematic metrics for evaluating summary quality.

Solution Provided by Future AGI

Future AGI offers an automated evaluation framework to overcome these challenges. Here’s how:

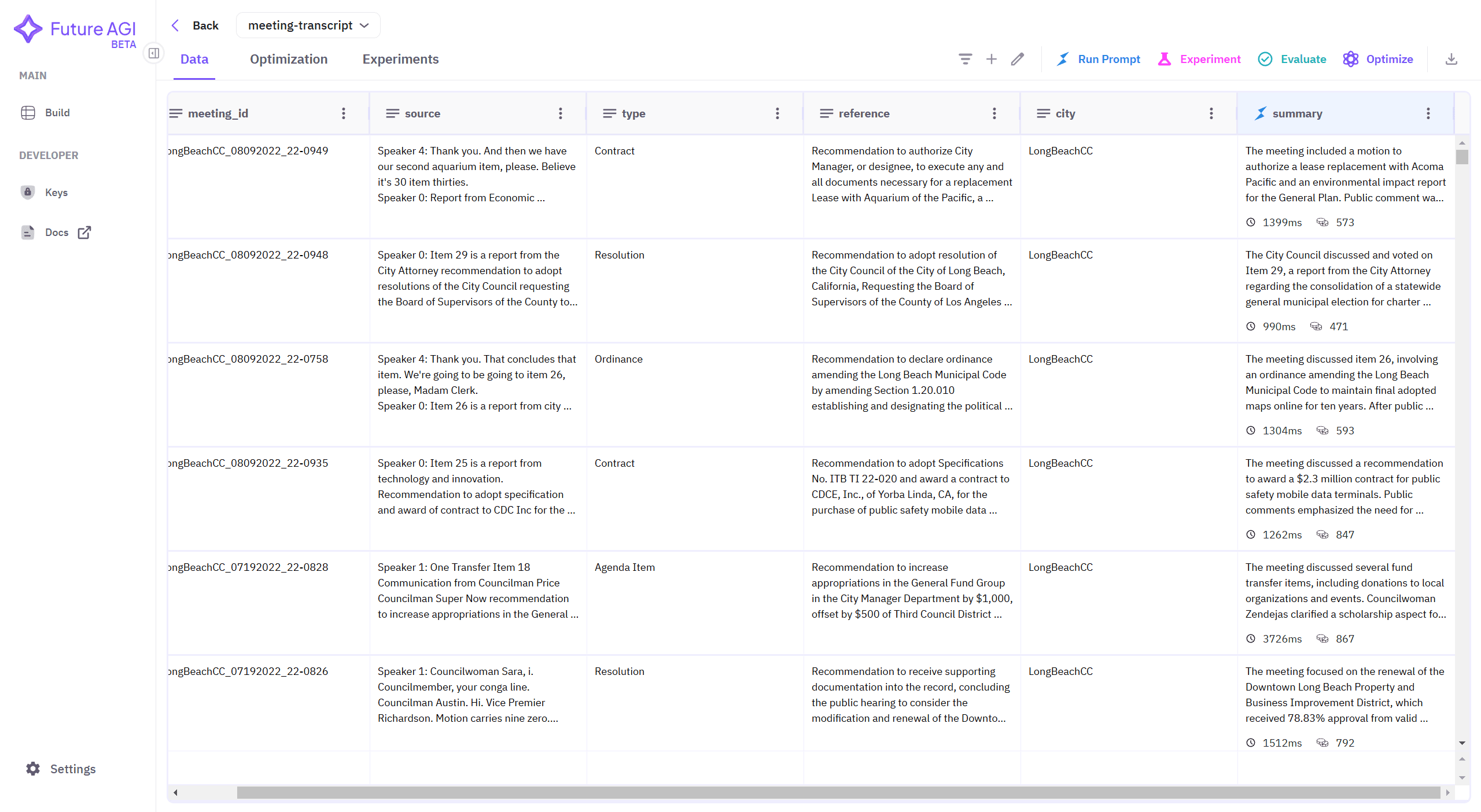

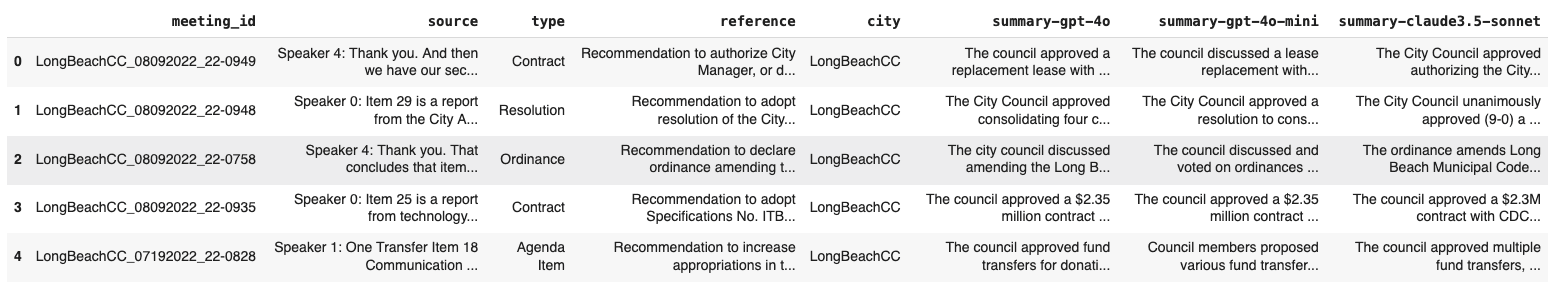

1. Generating Summaries from Multiple Models

Meeting transcripts were processed through various AI models to obtain summaries. These summaries formed the input for the evaluation framework.
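
A minimal sketch of this step, assuming each model is wrapped in a Python function that maps a transcript to a summary string. The two summarizers below are hypothetical toy stand-ins, not Future AGI or vendor APIs; a real pipeline would call each model instead. The output is one record per transcript and model, which is a convenient shape to pass to an evaluator.

```python
from typing import Callable, Dict, List

# A summarizer maps a transcript to a summary string.
Summarizer = Callable[[str], str]


def summarize_first_sentence(transcript: str) -> str:
    # Hypothetical toy stand-in: keep only the first sentence.
    return transcript.split(". ")[0].rstrip(".") + "."


def summarize_truncate(transcript: str) -> str:
    # Hypothetical toy stand-in: keep the first 12 words.
    return " ".join(transcript.split()[:12])


def collect_summaries(
    transcripts: List[str], models: Dict[str, Summarizer]
) -> List[Dict[str, str]]:
    """Return one record per (transcript, model) pair for later evaluation."""
    records = []
    for idx, transcript in enumerate(transcripts):
        for model_name, summarize in models.items():
            records.append({
                "transcript_id": str(idx),
                "model": model_name,
                "transcript": transcript,
                "summary": summarize(transcript),
            })
    return records


if __name__ == "__main__":
    transcripts = ["We agreed to ship v2 on Friday. Ana owns QA. Budget is unchanged."]
    models = {"model_a": summarize_first_sentence, "model_b": summarize_truncate}
    for record in collect_summaries(transcripts, models):
        print(record["model"], "->", record["summary"])
```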

2. Initializing Future AGI’s Evaluator Client

The evaluator client enables automated evaluation of model outputs based on pre-defined metrics.
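
Future AGI's actual client class and constructor arguments are not reproduced here. As a hedged illustration of the general pattern only, the sketch below models an evaluator client as an object that holds named metric functions and applies them to a summary. `EvaluatorClient`, `register`, and `evaluate` are hypothetical names, not the Future AGI SDK API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# A metric takes (summary, transcript) and returns a score; scores are clamped to [0, 1].
Metric = Callable[[str, str], float]


@dataclass
class EvaluatorClient:
    """Hypothetical evaluator client; NOT the Future AGI SDK API."""
    metrics: Dict[str, Metric] = field(default_factory=dict)

    def register(self, name: str, metric: Metric) -> None:
        # Add (or replace) a named metric.
        self.metrics[name] = metric

    def evaluate(self, summary: str, transcript: str) -> Dict[str, float]:
        # Run every registered metric and clamp each result to [0, 1].
        return {
            name: min(1.0, max(0.0, metric(summary, transcript)))
            for name, metric in self.metrics.items()
        }


if __name__ == "__main__":
    client = EvaluatorClient()
    # Toy metric: 1.0 if the summary is non-empty.
    client.register("non_empty", lambda s, t: 1.0 if s.strip() else 0.0)
    print(client.evaluate("Ship v2 Friday.", "We agreed to ship v2 on Friday."))
```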

3. Defining Evaluation Criteria

The summaries were evaluated using deterministic tests based on the following criteria:

Relevance: Does the summary cover the key points?

Coherence: Is the summary logically structured?

Brevity: Is the summary concise without losing critical information?

Coverage: Does the summary include all important topics?
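
Of the four criteria, brevity and coverage are the simplest to express as deterministic checks. The sketch below is one possible formulation, not Future AGI's implementation: brevity is scored from the summary-to-transcript word ratio against an assumed target (`max_ratio`, default 0.2), and coverage is the fraction of a hand-supplied list of key topics that appear in the summary. Relevance and coherence are harder to capture with simple string rules and are left out of this sketch.

```python
from typing import List


def brevity_score(summary: str, transcript: str, max_ratio: float = 0.2) -> float:
    """1.0 when the summary is at most max_ratio of the transcript's word count,
    falling linearly to 0.0 as the summary approaches the transcript's length."""
    if not 0.0 < max_ratio < 1.0:
        raise ValueError("max_ratio must be strictly between 0 and 1")
    summary_words = len(summary.split())
    transcript_words = max(1, len(transcript.split()))
    ratio = summary_words / transcript_words
    if ratio <= max_ratio:
        return 1.0
    # Linear penalty between max_ratio and 1.0; anything at or above 1.0 scores 0.
    return max(0.0, (1.0 - ratio) / (1.0 - max_ratio))


def coverage_score(summary: str, key_topics: List[str]) -> float:
    """Fraction of key topics mentioned in the summary (case-insensitive substring match)."""
    if not key_topics:
        return 1.0
    text = summary.lower()
    hits = sum(1 for topic in key_topics if topic.lower() in text)
    return hits / len(key_topics)


if __name__ == "__main__":
    transcript = " ".join(["word"] * 100)
    print(brevity_score("ten words " * 5, transcript))  # 10 words -> ratio 0.1
    print(coverage_score("Ship v2 Friday; Ana owns QA.", ["v2", "QA", "budget"]))
```

Substring matching is deliberately crude; it can miss paraphrased topics, which is one reason a model-based metric is useful alongside checks like these.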

Future AGI also provides a SummaryQuality metric that lets you assess the overall quality of a summary.

4. Performing Evaluation

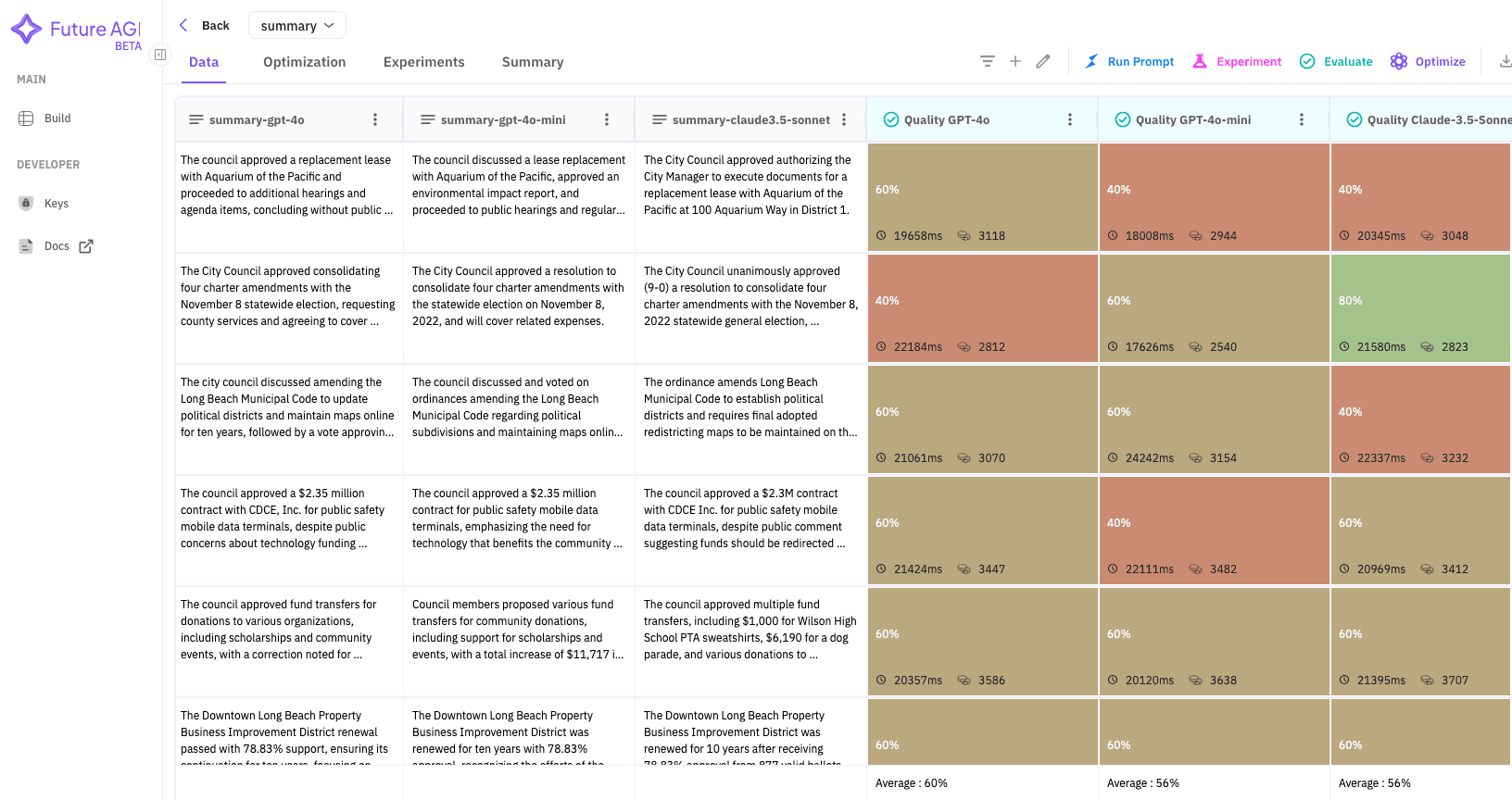

Each summary was evaluated against the criteria using Future AGI’s deterministic evaluation module and the SummaryQuality metric.
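
The scoring loop can be sketched as follows, assuming summaries are held as records with `model`, `transcript_id`, `transcript`, and `summary` keys and that each metric is a plain function of (summary, transcript). The record layout, the `evaluate_records` helper, and the toy metrics are illustrative assumptions; they do not reproduce Future AGI's deterministic evaluation module or the SummaryQuality metric.

```python
from typing import Callable, Dict, List

Metric = Callable[[str, str], float]


def evaluate_records(
    records: List[Dict[str, str]], metrics: Dict[str, Metric]
) -> List[Dict[str, object]]:
    """Score every summary with every metric; one output row per (record, metric)."""
    rows = []
    for record in records:
        for metric_name, metric in metrics.items():
            rows.append({
                "model": record["model"],
                "transcript_id": record["transcript_id"],
                "metric": metric_name,
                "score": metric(record["summary"], record["transcript"]),
            })
    return rows


if __name__ == "__main__":
    # Toy metrics standing in for the real criteria (illustrative only).
    metrics = {
        "non_empty": lambda s, t: 1.0 if s.strip() else 0.0,
        "shorter_than_source": lambda s, t: 1.0 if len(s) < len(t) else 0.0,
    }
    records = [
        {"transcript_id": "0", "model": "model_a",
         "transcript": "We agreed to ship v2 on Friday.", "summary": "Ship v2 Friday."},
    ]
    for row in evaluate_records(records, metrics):
        print(row)
```

Emitting one row per (summary, metric) pair keeps the results in a long format that is easy to group by model or by metric afterwards.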

5. Comparing Models

The evaluation scores for each model’s summaries were aggregated to identify the best-performing model.
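
One simple way to aggregate, assuming scores are stored as rows with `model`, `metric`, and `score` keys: average all scores per model and rank the models by that mean. The `rank_models` helper and the sample scores are hypothetical and illustrative; they are not results from this case study.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple


def rank_models(rows: List[Dict[str, object]]) -> List[Tuple[str, float]]:
    """Average every score per model and sort models from best to worst."""
    scores_by_model: Dict[str, List[float]] = defaultdict(list)
    for row in rows:
        scores_by_model[str(row["model"])].append(float(row["score"]))
    averages = {model: mean(scores) for model, scores in scores_by_model.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Illustrative scores only, not results from the case study.
    rows = [
        {"model": "model_a", "metric": "coverage", "score": 0.8},
        {"model": "model_a", "metric": "brevity", "score": 0.6},
        {"model": "model_b", "metric": "coverage", "score": 0.9},
        {"model": "model_b", "metric": "brevity", "score": 0.9},
    ]
    ranking = rank_models(rows)
    print("best model:", ranking[0][0])
```

An unweighted mean treats every criterion as equally important; if coverage matters more than brevity for a given team, a weighted average is a straightforward variation.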

Results and Dashboard Integration

The evaluation results were visualized on the Future AGI dashboard, showcasing:

Distribution of evaluation scores for each model.

Comparison of metrics like relevance, coherence, brevity, and coverage.

Identification of the top-performing model.

Key Outcomes

Streamlined Evaluation: Reduced manual effort in assessing summaries by 90%.

Objective Scoring: Replaced subjective judgments with consistent metrics.

Improved Summarization Quality: Enabled data-driven selection of the best model.

Efficiency: Increased internal team efficiency by 20% through better selection of summarization models.

Scalability: Evaluated 10x more summaries within the same timeframe, enabling faster iterations and experimentation.

Conclusion

Future AGI’s evaluation framework streamlines the assessment of AI-generated meeting summaries. By automating the evaluation process and providing actionable insights, it helps organizations select the most effective models, improve communication, and achieve measurable productivity gains.