Introduction

Generative AI is changing both our work lives and our day-to-day routines at lightning speed. When we say “generative AI,” we mean systems that create fresh content such as words, pictures, and even videos from scratch. You might remember GPT-3 or GPT-4 for text, or DALL·E and Stable Diffusion for images. More recently, Google’s Gemini has pushed things even further. By 2025, generative AI hit a real sweet spot: thanks to smarter model designs, these tools now run faster and need far less computing horsepower.

Over the past few years, we’ve seen mind-blowing progress. ChatGPT showed everyone that AI could have a genuinely useful back-and-forth with a person. Then tools like Stable Diffusion and DALL·E dazzled us by crafting detailed images from nothing more than a typed description. Meanwhile, next-generation models such as Gemini and GPT-4 kept moving the needle on writing clarity and understanding.

In this piece, we’re going to peel back the layers: first, we’ll walk through the trends shaping the field, then dig into the nuts and bolts of what makes generative AI tick, and finally look at the tools you can plug into whatever field you’re working in.

Generative AI Trends for 2026

Trend 1: Emergence of Agentic AI

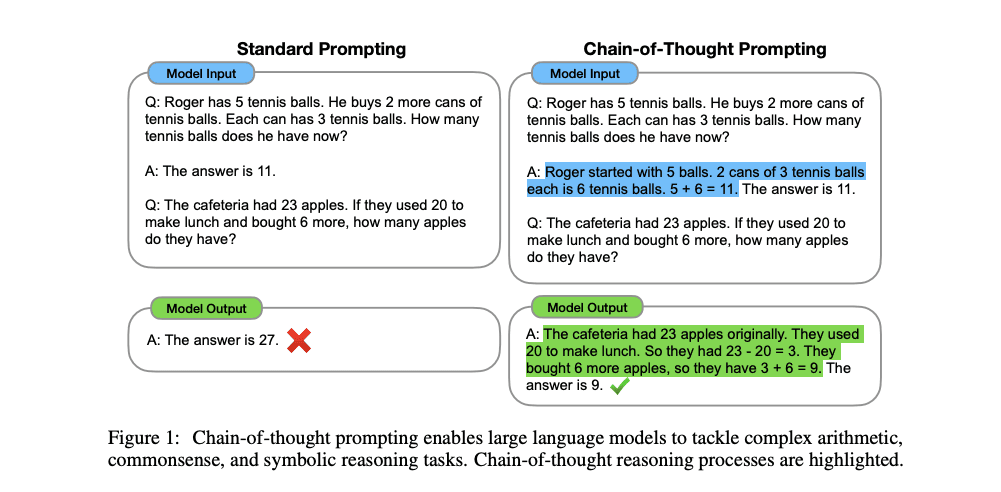

In 2026, AI is more than just chatbots that spit out text. It's also about intelligent agents that can think for themselves, use planning modules to make big-picture plans, and chain-of-thought prompting to break down difficult tasks into smaller ones.

These agents can "talk" to IoT devices over the internet, coordinating everything from smart thermostats to factory machinery in a way that feels natural and easy to understand.

Image 1: Chain-of-Thought Prompting

The best part? Routine workflows that used to require a lot of clicking by people are now fully automated, which gives people more time to make more important decisions.

At the same time, humans and AI are teaming up in new ways: experts assign the heavy, multi‐step chores to agents and focus on strategic thinking, while AI handles the basic work.

That shift is rewriting how businesses operate: departments get more autonomy, processes become more transparent, and companies are finally able to scale complicated operations without constant oversight.
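The plan-then-execute pattern behind such agents can be sketched as a toy loop. Everything in this example is hypothetical: `plan` stands in for a planning module (a real agent would ask a language model for the steps), and the two tools with their sensor readings are invented for illustration.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools; a real agent would wrap device or service APIs here.
def read_temperature(room: str) -> float:
    readings = {"office": 26.5, "lab": 21.0}  # made-up sensor values
    return readings[room]

def set_thermostat(room: str, target: float) -> str:
    return f"{room} thermostat set to {target:.1f}C"

TOOLS: Dict[str, Callable] = {
    "read_temperature": read_temperature,
    "set_thermostat": set_thermostat,
}

def plan(goal: str) -> List[Tuple[str, dict]]:
    """Stand-in for a planning module: break a goal into ordered tool calls."""
    if goal == "cool the office":
        return [
            ("read_temperature", {"room": "office"}),
            ("set_thermostat", {"room": "office", "target": 22.0}),
        ]
    raise ValueError(f"no plan for goal: {goal}")

def run_agent(goal: str) -> List[str]:
    """Execute each planned step in order and keep a readable trace."""
    trace = []
    for tool_name, args in plan(goal):
        result = TOOLS[tool_name](**args)
        trace.append(f"{tool_name}({args}) -> {result}")
    return trace
```

Calling `run_agent("cool the office")` returns a two-step trace. Keeping that trace is one simple way to give people visibility into what an agent did without watching every step.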

Trend 2: Efficiency & Scalability Enhancements

The race is on in 2025 to make AI models that not only work well but also run fast and lean. OpenAI's o3-mini is a good example of a compact model that gets smaller without losing too much capability. Techniques like quantization, pruning, and distillation are the usual tools for shrinking models this way.

Instead of only chasing more powerful GPUs and TPUs, hardware makers are also quietly building chips that use less power, which makes each inference call less expensive.

What does this mean? Now that the models are smaller and cheaper, businesses of all sizes can use cutting-edge AI without going over their budgets.

These models are better for the environment because they use less energy. This goes along with the growing need to cut down on AI's carbon footprint.

As more people use it, the cost per query keeps going down. This means that advanced AI will be used for everything from personalized education platforms to real-time translation services, and it won't cost a lot of money.
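To make quantization, one of the techniques named in this trend, a little more concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It is an illustration under simplifying assumptions (a single scale for the whole list, made-up weight values) and not how any particular model or library does it.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.82, -1.27, 0.05, 0.0, 0.64]  # made-up values for illustration
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Rounding error per weight is at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight is stored as one small integer plus a shared scale, trading a bounded rounding error for a smaller memory footprint.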

Trend 3: Multi-Modal & Cross-Modal Generation

In 2026, generative AI technologies combine text, images, audio, and video. Models such as DALL·E 3 and Sora demonstrate the generation of visual content from textual prompts. Hybrid designs that use latent diffusion models and implicit neural representations improve this integration, and control frameworks such as ControlNet further refine the synchronization across data types.

Key factors include:

Seamless Modality Fusion: Integrating different data formats without visible seams between them.

Coherent Outputs: Ensuring that all created content is logically consistent.

Technical Challenges: Figuring out how best to align the different modalities.

You must overcome these challenges to generate multimodal content that is contextually relevant and effective.

Trend 4: AI Orchestration & Pipeline Automation

In 2026, orchestration platforms help manage multiple generative models within cohesive workflows. Microservice architectures and API ecosystems are the recommended building blocks for this integration. Orchestration facilitates dynamic routing, which assigns each task to the most appropriate model. It also enables the sharing of context among models and the implementation of error correction across AI agents.

Advantages include:

Enhanced Efficiency: Coordinating the activities of many AI components to optimize processes.

Scalability: The ability to easily enhance capabilities by including or modifying models.

Reliability: Making the system more stable by handling errors in an organized way.

These techniques generate dependable and effective AI-driven processes.
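The routing, shared context, and error handling described in this trend can be sketched in a few lines. This is a toy illustration, not the design of any specific orchestration platform: the handler functions, the `ROUTES` table, and the simulated failure are all hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical model handlers; in practice each would call a separate model service.
def text_model(task: dict) -> str:
    return f"summary of: {task['payload']}"

def image_model(task: dict) -> str:
    raise RuntimeError("image service unavailable")  # simulated outage

def fallback_model(task: dict) -> str:
    return f"fallback handled {task['kind']} task"

# Dynamic routing table: task kind -> handlers to try, in order.
ROUTES: Dict[str, List[Callable[[dict], str]]] = {
    "text": [text_model, fallback_model],
    "image": [image_model, fallback_model],
}

def orchestrate(task: dict, context: dict) -> str:
    """Route a task by kind, fall back on failure, and log to a shared context."""
    errors: List[str] = []
    for handler in ROUTES.get(task["kind"], [fallback_model]):
        try:
            result = handler(task)
        except RuntimeError as exc:
            message = f"{handler.__name__}: {exc}"
            errors.append(message)
            context.setdefault("errors", []).append(message)
            continue
        context.setdefault("history", []).append((handler.__name__, result))
        return result
    raise RuntimeError("all handlers failed: " + "; ".join(errors))
```

Passing the same `context` dictionary to every call is the simplest form of context sharing: later steps can read what earlier models produced and which ones failed.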

Trend 5: Democratization via Open-Source & Developer Toolkits

Generative AI is now more accessible due to the growing number of open-source frameworks in 2026, including Stable Diffusion WebUI and Hugging Face Transformers. Developers can effectively personalize models with the help of fine-tuning APIs and plug-and-play modules, which enable quick prototyping. When people get together in communities to share knowledge and resources, they greatly increase the rate of innovation.

Benefits include:

Accessibility: Reducing the challenges that developers face in implementing AI solutions.

Customization: Allowing customized solutions through adaptable modules.

Community Support: Promoting innovation through collaborative efforts and the sharing of knowledge.

This opens up the field of generative AI so that more developers can build on and contribute to its progress.

Trend 6: Emergence of Advanced Reasoning Models

In 2026, AI development is shifting toward advanced reasoning models, which make AI better at solving problems. Advanced reasoning models are AI systems designed to tackle complex, multi-step problems by breaking them down into smaller, manageable steps. Some of the most significant models are Grok-3 by xAI, R1 by DeepSeek, and o3 by OpenAI. DeepSeek's R1 introduced a training framework based on reinforcement learning, which lets the model improve through feedback and self-generated reward signals.

This method lowers dependency on large labeled datasets, which improves training efficiency. R1 also uses a "mixture of experts" design that activates only the relevant expert networks for a given task, which saves computing resources. Likewise, the Grok-3 model from xAI is equipped with sophisticated reasoning capabilities, including modes such as "Think" for step-by-step problem-solving and "Big Brain" for complex tasks that require significant computational power. These developments highlight a more general trend in AI toward models that can independently improve their reasoning capabilities, indicating a substantial change in AI training methodologies.

Important aspects of reasoning models:

Reinforcement Learning Paradigm: Reinforcement learning (RL) has been used before to train large language models (LLMs) without labeled data. DeepSeek's R1 line stands out because its R1-Zero variant was trained with RL alone, skipping traditional supervised fine-tuning. This method lets intricate reasoning skills develop through self-improvement, which lowers the dependence on extensive labeled datasets.

Mixture-of-Experts Architecture: R1 optimizes computational efficiency by activating only the expert networks that are relevant to the task.

Advanced Reasoning Modes: Grok-3 from xAI provides "Think" and "Big Brain" modes to enable customized techniques to solve problems.

Autonomous Improvement: These models serve as prime examples of AI systems that are capable of self-improvement through innovative training methods.

Changes in Training Methodologies: The emphasis is shifting toward the development of AI models that are self-enhancing and efficient, which decreases the reliance on large-scale labeled datasets.

These improvements show how AI is always changing, with a focus on speed and self-learning in model creation.
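The "activate only the relevant experts" idea behind a mixture-of-experts layer can be illustrated with a small top-k routing sketch. This is a generic toy version, not DeepSeek's implementation: the four experts are simple stand-in functions and the gate scores are hard-coded, whereas a real model learns both.

```python
import math
from typing import Callable, List, Tuple

def softmax(scores: List[float]) -> List[float]:
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x: float, gate_scores: List[float],
                experts: List[Callable[[float], float]],
                top_k: int = 2) -> Tuple[float, List[int]]:
    """Run only the top_k highest-scoring experts and mix their outputs."""
    ranked = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)
    active = ranked[:top_k]
    # Renormalize the gate weights over the active experts only.
    weights = softmax([gate_scores[i] for i in active])
    output = sum(w * experts[i](x) for w, i in zip(weights, active))
    return output, active

# Stand-in experts and made-up gate scores for illustration.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
gate_scores = [0.1, 2.0, 2.0, -1.0]
output, active = moe_forward(3.0, gate_scores, experts)
```

Only two of the four experts run for this input, which is where the compute savings come from: the other experts add capacity to the model without adding to the cost of this call.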

Fundamental Technologies and Architectures

Generative AI has progressed significantly thanks to new optimization techniques and model architectures. Studying these basic technologies gives you a better grasp of present capabilities and future directions.

3.1 Transformer Architectures and LLM Innovations

Transformers changed AI by allowing models to process data in parallel, which substantially improved natural language processing. Early models like GPT-2 laid the foundation, and later versions like GPT-4 built on it.

Key characteristics include:

Self-Attention Mechanisms: These let models decide how important different words are in a sentence, which helps them understand context.

Multi-Head Attention: This mechanism lets the model focus on different parts of the input at the same time, so it can capture a wide range of relationships.

Chain-of-Thought Reasoning: Enhances the model's problem-solving capabilities by enabling step-by-step logical reasoning.
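The self-attention mechanism listed above comes down to one formula, softmax(QK^T / sqrt(d))V. The sketch below implements it in plain Python for readability. As a simplifying assumption, the token vectors are used directly as queries, keys, and values; a real transformer first passes them through learned projection matrices, and multi-head attention runs several such projections in parallel.

```python
import math
from typing import List

Matrix = List[List[float]]

def softmax(row: List[float]) -> List[float]:
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    output = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how much attention each position receives
        output.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return output

# Three toy 2-dimensional "token" vectors (made-up values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = attention(tokens, tokens, tokens)
```

Each output row is a weighted average of the value vectors, with the weights showing how strongly that token attends to every other token.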

Recent advances have produced hybrid models combining vision and language processing, allowing tasks such as visual question answering and image captioning.

3.2 Diffusion Models & Latent Space Techniques

In generative AI, diffusion probabilistic models have emerged as strong tools, especially for the synthesis of audio and image data. These models function by simulating the data generation process as a progressive transformation of basic distributions into complex ones.

Benefits include:

Computational Overhead Reduction: Latent diffusion models are more efficient due to their operation in a compressed space.

Improved Control: The use of techniques such as classifier-free guidance and conditional diffusion enables the generation of outputs that are more precise.

These advancements make it possible to create top-notch content more efficiently and with more control.
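Two formulas sit behind the ideas in this section: the forward noising step, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, and the classifier-free guidance rule, ε = ε_uncond + w·(ε_cond − ε_uncond). The sketch below writes both out in plain Python. It is illustrative only: the noise predictions passed to `guided_noise` would come from a trained network, which is not shown, and the function names are hypothetical.

```python
import math
import random
from typing import List

def add_noise(x0: List[float], alpha_bar: float, rng: random.Random) -> List[float]:
    """Forward diffusion: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    return [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0)
        for x in x0
    ]

def guided_noise(eps_uncond: List[float], eps_cond: List[float],
                 scale: float) -> List[float]:
    """Classifier-free guidance: move the prediction toward the conditional one.

    scale = 1.0 returns the conditional prediction unchanged; larger values
    follow the prompt more strongly.
    """
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]
```

With `alpha_bar` close to 1 the sample is almost the original data, and with `alpha_bar` close to 0 it is almost pure noise. Generation runs this process in reverse, and the guidance scale controls how tightly each denoising step follows the prompt.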

3.3 Implicit Neural Representations (INRs) & Control Mechanisms

INRs represent pictures and 3D scenes as continuous functions. Coordinate-based multi-layer perceptrons (MLPs) let INRs build detailed representations from very little input data.
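A coordinate-based MLP is easiest to picture as a function from a position to a value. The sketch below is a tiny, untrained example under stated assumptions: the `CoordinateMLP` class, its layer size, and its random weights are hypothetical, and a real INR would be fitted to an image or scene by gradient descent.

```python
import math
import random
from typing import List

class CoordinateMLP:
    """Tiny untrained MLP mapping a 2D coordinate to one intensity in (0, 1)."""

    def __init__(self, hidden: int = 8, seed: int = 0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
        self.b1 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.b2 = rng.uniform(-1, 1)

    def __call__(self, x: float, y: float) -> float:
        # Sine activations are one common choice for INRs, not the only one.
        hidden = [math.sin(w[0] * x + w[1] * y + b) for w, b in zip(self.w1, self.b1)]
        logit = sum(w * h for w, h in zip(self.w2, hidden)) + self.b2
        return 1.0 / (1.0 + math.exp(-logit))  # squash to (0, 1)

def render(field: CoordinateMLP, size: int) -> List[List[float]]:
    """Sample the continuous field on a size x size grid over [0, 1] x [0, 1]."""
    step = 1.0 / (size - 1)  # assumes size >= 2
    return [[field(col * step, row * step) for col in range(size)]
            for row in range(size)]
```

Because the representation is a function and not a pixel grid, the same `CoordinateMLP` can be rendered at 3x3 or 500x500 without storing more data. Only the sampling density changes.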

Uses include:

Conditional Image Synthesis: The generation of images is facilitated by the integration of control mechanisms, which are based on specific conditions or inputs. NVIDIA's Product-of-Experts Generative Adversarial Networks (PoE-GAN) are among the most successful models in this field, as they effectively integrate a variety of modalities, including text descriptions, drawings, and style references, to generate images that fulfill complex user requirements.

Text-to-3D Generation: This feature makes it easier to make 3D models from written details, which opens up new design and virtual reality options. Meshy is a tool that enables users to swiftly convert text prompts into detailed 3D models, which supports fast prototyping and creative exploration.

Real-Time Rendering: Allows effective scene rendering, which is useful for simulation and gaming applications. The Cosmos AI framework from NVIDIA helps to train autonomous vehicles and robots by creating photorealistic 3D models and environments, which enhances their ability to interact with the real world.

These methods help to provide more engaging and dynamic AI-generated content.

3.4 Model Compression, Quantization & Inference Optimization

It is becoming more and more important to optimize AI models for efficiency as their complexity increases. Methods like pruning, distillation, and mixed-precision training help cut down on model size and boost performance.

Techniques to consider include:

Pruning: The process of eliminating superfluous parameters to slim down the model. NVIDIA's NeMo framework illustrates the pruning and distillation process by reducing Meta's Llama-3.1-8B model to a more efficient 4B version.

Distillation: The process of transferring knowledge from a large model to a smaller one without a substantial decrease in performance. The s1 model was developed by researchers from Stanford and the University of Washington using distillation, which resulted in competitive performance at a fraction of the cost and time.

Mixed-Precision Training: The use of lower precision calculations to expedite training and inference while maintaining their accuracy. PyTorch's automatic mixed-precision (AMP) feature is a prime example of this approach, as it optimizes training on compatible hardware and minimizes memory consumption.
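Two of the techniques above fit in a few lines each. The sketch below shows magnitude pruning (zeroing the smallest weights) and a classic soft-label distillation loss (KL divergence between temperature-softened teacher and student distributions). It is a simplified illustration with hypothetical function names, not the procedure used by NeMo or by the s1 authors.

```python
import math
from typing import List

def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the smallest-magnitude weights; sparsity is the fraction removed."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned

def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits: List[float], student_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge. Training the smaller model to minimize it is what transfers the larger model's behavior.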

It is important to find a balance between output quality and efficiency so that models continue to work well even when resources are limited.

These basic technologies and designs are speeding up progress in generative AI, making more advanced and efficient uses possible in many areas.

Popular Applications and Tools in Generative AI for 2025

In 2025, generative AI has become a critical component of a variety of industries, providing innovative solutions that improve decision-making, efficiency, and creativity. Here is a list of some of the most important tools and applications that are changing the world:

ChatGPT

ChatGPT o1: The o1 model, which was introduced in December 2024, was designed to tackle complex problems by taking additional time to think before responding. This deliberate processing lets the model check its results and try out different approaches, which makes it more accurate. Compared to earlier models, o1 works far better in fields like mathematical reasoning, competitive programming, and scientific reasoning.

ChatGPT o3-mini: OpenAI introduced the o3-mini model in January 2025 as a more cost-effective and efficient alternative in its reasoning series. It responds faster than its predecessor, o1-mini, and can match or exceed o1's accuracy at higher reasoning-effort settings. It is optimized for coding, math, and scientific tasks. Different apps can use this model because it's available in both ChatGPT and the API.

Creative Content Generation

Adobe Firefly Video Model: Adobe's Firefly Video Model allows users to create videos from text prompts or images, changing elements such as camera angles and atmosphere. The tool offers designers a simple interface and fits perfectly with Adobe Creative Cloud.

DALL-E 3: Designed by OpenAI, DALL-E 3 pushes the edge of AI-driven art and design by excelling at producing detailed visuals from textual descriptions.

Automation and Software Development

GitHub Copilot: Driven by OpenAI's Codex, GitHub Copilot helps developers by immediately recommending code snippets and whole functions, hence optimizing the development process.

Moveworks: Moveworks provides AI-driven solutions for enterprise problems, such as automated IT support and employee communications, which improve productivity and efficiency.

Healthcare and Scientific Research

Latent Labs: Latent Labs was started by Simon Kohl, a former DeepMind scientist. It uses AI to create synthetic proteins and wants to change the way drugs are found by speeding up the development of new medicines.

AI Writing and Communication Tools

Grammarly: Grammarly uses AI to help people write by offering suggestions for grammar, style, and tone, which makes written communication clearer and more effective.

Jasper: Jasper is an AI writing assistant that caters to the requirements of content creators and marketers by assisting in the creation of content across a variety of formats, such as social media posts, blogs, and marketing copy.

AI-Powered Design and Creativity Tools

AI Features of Canva: Canva uses AI to improve its design capabilities, enabling users to generate design elements and layouts more efficiently, which makes graphic design more accessible.

Runway: Runway offers a range of AI tools for creators, including video editing and special effects generation, which supports creative innovation.

Open-Source AI Frameworks

Hugging Face Transformers: Hugging Face provides an open-source collection of transformer models that supports a broad spectrum of natural language processing tasks and encourages community contribution.

Stable Diffusion WebUI: Stable Diffusion WebUI lets people explore AI-driven art production by providing a browser-based interface for creating images with diffusion models.

AI in Business Intelligence

AlphaSense: AlphaSense uses AI to analyze and extract insights from extensive business data, which helps companies in making well-informed decisions.

Amazon Q Business: Amazon's AI-driven business intelligence tool assists organizations in the analysis of data trends and the generation of actionable insights, which allows for strategic planning.

AI in Education

Khanmigo: Khan Academy's Khanmigo is an AI-powered tutor that offers personalized learning experiences and helps students understand complex concepts through interactive lessons.

Socratic by Google: Socratic uses AI to assist students with assignments by offering resources and explanations that are customized to their inquiries.

AI for Customer Support

Ada: Ada provides AI-powered chatbots to answer consumer questions quickly and precisely, hence raising customer happiness.

Zendesk's Answer Bot: It uses AI to solve common customer problems, which makes support more efficient and reduces the amount of work that human agents have to do.

AI in Music and Audio Production

AIVA (Artificial Intelligence Virtual Artist): AIVA helps musicians and producers in the creative process by composing original music in a variety of genres.

These tools are among the leading generative AI applications in 2025. Each one helps its own discipline progress, and together they stimulate innovation across sectors.

Conclusion

In recent years, generative AI has made significant strides, introducing trends such as agentic AI, multi-modal generation, and efficiency enhancements. These changes have led to uses in healthcare, creative content, and business automation.

Looking ahead, OpenAI intends to unveil GPT-4.5, sometimes referred to as Orion, as its last non-chain-of-thought model. GPT-5 is planned to combine several technologies, notably o3, to improve performance across different tasks. As it develops, generative AI has the potential to revolutionize sectors by improving creativity, automating challenging activities, and offering better insights. Getting the most out of it across these areas depends on addressing ethical issues and ensuring responsible deployment.

Future AGI is a one-stop platform for designing, deploying, and monitoring AI pipelines, with built-in tools like Observe for live performance tracking and Protect for automated risk mitigation.

FAQs

How are AI models made more efficient and scalable?

What does multimodal generation involve?

What is AI orchestration?

What are advanced reasoning models?